Built from First Principles: Why copper-rs works so well building robots with AI coding systems

When I first tried LLMs on the Copper code base itself, I have to admit it was an unmitigated disaster. Copper is a new way of thinking about what a robotics runtime is, and Codex and Copilot had absolutely no idea where I wanted to go and no examples to base themselves on. The more I tried to prompt my way out of this, the more spaghetti code they produced.

It almost made me a full-on skeptic of AI-assisted coding. But when I started developing a new set of components on top of Copper to make a good platform for building drones, the results completely blew my mind. Here is my analysis of why…

Good, principled Engineering will never go away.

Determinism and observability have always been the #1 non-negotiable features of Copper

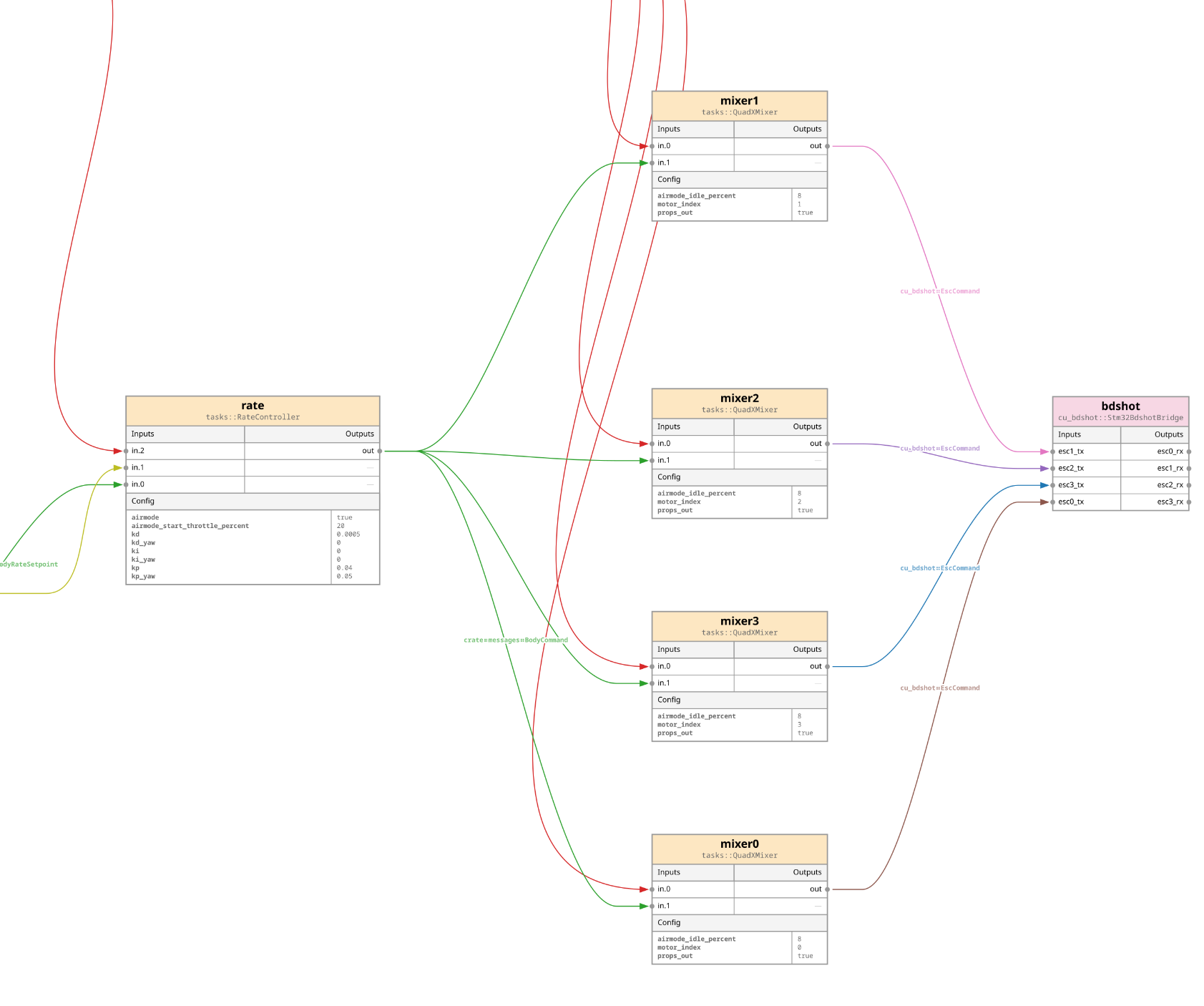

Copper can generate SVG diagrams of the structure of your robot. Here is the actuation part of our flight controller example.

For roboticists' productivity: I have a saying that “if a bug is reproducible, it is already dead”. Most robotics frameworks, including ROS, do not guarantee deterministic replay, so you have no guarantee that you can reproduce a bug.

As a proof of safety: using a dataset as evidence in your safety case is easier if (1) you can prove that your robot always behaves the same way given the same data and (2) you can prove that your data coverage is good enough for your problem space. If you integrate complex ML inference in your architecture, this is the only way you have to prove that your robot is safe.
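To make the “same data in, same behavior out” property concrete, here is a minimal Rust sketch. It is not Copper's API: `Sample`, `Integrator`, and `replay` are hypothetical names chosen for illustration. What it shows is that a component which takes time from the logged data, rather than from the wall clock, produces exactly the same outputs every time the log is replayed.

```rust
/// Hypothetical logged input: a timestamp plus a velocity reading.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Sample {
    pub t_ns: u64,
    pub velocity: f64,
}

/// Integrates velocity into position using only the logged timestamps.
#[derive(Debug, Default)]
pub struct Integrator {
    last_t_ns: Option<u64>,
    position: f64,
}

impl Integrator {
    /// One cycle: the new state depends only on the old state and the input.
    pub fn step(&mut self, s: Sample) -> f64 {
        if let Some(last) = self.last_t_ns {
            // Time comes from the data, never from the system clock.
            let dt_s = s.t_ns.saturating_sub(last) as f64 / 1e9;
            self.position += s.velocity * dt_s;
        }
        self.last_t_ns = Some(s.t_ns);
        self.position
    }
}

/// Replays a log from a fresh state and returns every output.
pub fn replay(log: &[Sample]) -> Vec<f64> {
    let mut integrator = Integrator::default();
    log.iter().map(|s| integrator.step(*s)).collect()
}
```

Calling `replay` twice on the same log yields identical vectors, which is the property a regression test or a safety argument can lean on. Reading the system clock inside `step` would break it.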

Zero performance cost modularity

Robots are complex and already very entangled: between physics, EE, the OS, and algorithms, you get unpredictable side effects. If you also entangle your robot code, you are in for a very bad experience.

Robotics frameworks usually use a variant of microservice architecture to isolate pieces of the code from the rest of the system. But doing so usually adds a ton of overhead.

Instead of letting the system be randomly driven by an OS, Copper applies the same isolation principle with a modular structure, but uses that structure to basically build an OS around it!

This allows Copper to optimize your robot end to end at compile time and squeeze everything possible out of the underlying hardware.

It also lets you build smaller, more testable, discrete modules, because splitting things up has virtually no execution overhead!
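Rust generics give a feel for why modularity can be free. The sketch below is a general Rust illustration, not Copper's generated runtime: `Stage`, `Scale`, `Clamp`, and `Chain` are hypothetical names. Two small modules are wired together at compile time through static dispatch, so the compiler sees one concrete type and can inline across the module boundary, with no queue, no serialization, and no dynamic dispatch in between.

```rust
/// Hypothetical module contract: a typed input and a typed output.
pub trait Stage {
    type In;
    type Out;
    fn process(&mut self, input: Self::In) -> Self::Out;
}

/// Multiplies the input by a fixed gain.
pub struct Scale(pub f32);

impl Stage for Scale {
    type In = f32;
    type Out = f32;
    fn process(&mut self, input: f32) -> f32 {
        input * self.0
    }
}

/// Limits the input to the range [min, max].
pub struct Clamp {
    pub min: f32,
    pub max: f32,
}

impl Stage for Clamp {
    type In = f32;
    type Out = f32;
    fn process(&mut self, input: f32) -> f32 {
        input.clamp(self.min, self.max)
    }
}

/// Connects two stages; the types must line up or the program does not compile.
pub struct Chain<A, B>(pub A, pub B);

impl<A, B> Stage for Chain<A, B>
where
    A: Stage,
    B: Stage<In = A::Out>,
{
    type In = A::In;
    type Out = B::Out;
    fn process(&mut self, input: A::In) -> B::Out {
        // Static dispatch: both calls are resolved at compile time.
        self.1.process(self.0.process(input))
    }
}
```

For example, `Chain(Scale(2.0), Clamp { min: 0.0, max: 1.0 })` is a single pipeline type, while each stage remains small enough to test on its own.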

Copper is built on Rust, with Rust, and for good reasons.

“If a bug is reproducible, it is already dead.”

Robots need to stay close to systems programming (see the two points I made earlier), but when you ask people who are not systems programming specialists to produce performant algorithms in C++, you end up chasing undefined behavior in safety-critical code all the time. As robots get more useful and work closer and closer to people, it is a no-brainer to nip stack and memory corruption in the bud instead of discovering it maybe with some magic linter, worse on CI, and even worse on the robot itself.

Copper 😼 & Rust 🦀 as a containment for LLM entropy

LLMs tend to produce too much code and to fix things locally, which generates a bunch of spaghetti code if they are let loose across a large code base.

Copper has very precise semantics for what a Task is, with a very clear execution and memory model for anyone implementing one. In debug mode, Copper can also monitor the general realtimeness of the tasks, using RTSan and a custom memory allocator that catches allocations made at the wrong moments.
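One way such an allocation check can work is sketched below with the standard `GlobalAlloc` trait. This is an assumption about the general technique, not Copper's actual allocator: `RtGuardAllocator`, `IN_RT_SECTION`, `RT_ALLOCATIONS`, and `count_rt_allocations` are hypothetical names. The wrapper forwards every request to the system allocator and counts the ones that happen while the current thread is marked as being inside a realtime section.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::cell::Cell;

thread_local! {
    // Hypothetical per-thread state: whether we are inside a realtime
    // section, and how many allocations happened there.
    static IN_RT_SECTION: Cell<bool> = const { Cell::new(false) };
    static RT_ALLOCATIONS: Cell<usize> = const { Cell::new(0) };
}

/// Wraps the system allocator and counts allocations made in realtime sections.
pub struct RtGuardAllocator;

unsafe impl GlobalAlloc for RtGuardAllocator {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        // try_with avoids a panic if the thread-local is already torn down.
        let in_rt = IN_RT_SECTION.try_with(|flag| flag.get()).unwrap_or(false);
        if in_rt {
            let _ = RT_ALLOCATIONS.try_with(|n| n.set(n.get() + 1));
        }
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        unsafe { System.dealloc(ptr, layout) }
    }
}

#[global_allocator]
static GLOBAL: RtGuardAllocator = RtGuardAllocator;

/// Runs `f` as a realtime section and returns how many allocations it made.
/// Sketch only: a panic inside `f` would leave the flag set.
pub fn count_rt_allocations<F: FnOnce()>(f: F) -> usize {
    RT_ALLOCATIONS.with(|n| n.set(0));
    IN_RT_SECTION.with(|flag| flag.set(true));
    f();
    IN_RT_SECTION.with(|flag| flag.set(false));
    RT_ALLOCATIONS.with(|n| n.get())
}
```

A debug build could wrap each task cycle in `count_rt_allocations` and fail loudly on a non-zero count, which gives a coding agent an unambiguous signal that it allocated on the hot path.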

Unit tests can emulate one cycle of the execution very easily, so your components, which are small by design, can basically be designed through unit tests.

Add Rust's strong opinions about memory to this: if you give those expected inputs and outputs to your digital coding friend, it has almost no choice but to produce correct code.
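As an illustration of what testing one cycle can look like, here is a minimal sketch. The `Task` trait below is a hypothetical stand-in written for this example and is not Copper's real task trait; `RateInput`, `TorqueCommand`, and `RateP` are likewise made up. One call to `process` is one cycle, with explicit input and output and no hidden state, so a test only has to build an input, run a cycle, and inspect the output.

```rust
/// Hypothetical task contract: one call is one execution cycle.
pub trait Task {
    type Input;
    type Output;
    fn process(
        &mut self,
        input: &Self::Input,
        output: &mut Self::Output,
    ) -> Result<(), &'static str>;
}

#[derive(Debug, Default, Clone, Copy, PartialEq)]
pub struct RateInput {
    pub setpoint_rad_s: f32,
    pub measured_rad_s: f32,
}

#[derive(Debug, Default, Clone, Copy, PartialEq)]
pub struct TorqueCommand {
    pub normalized: f32,
}

/// Proportional rate controller with a saturated output.
pub struct RateP {
    pub kp: f32,
}

impl Task for RateP {
    type Input = RateInput;
    type Output = TorqueCommand;

    fn process(
        &mut self,
        input: &RateInput,
        output: &mut TorqueCommand,
    ) -> Result<(), &'static str> {
        if !input.setpoint_rad_s.is_finite() || !input.measured_rad_s.is_finite() {
            return Err("non-finite rate input");
        }
        let error = input.setpoint_rad_s - input.measured_rad_s;
        output.normalized = (self.kp * error).clamp(-1.0, 1.0);
        Ok(())
    }
}

#[test]
fn one_cycle_produces_a_saturated_command() {
    let mut task = RateP { kp: 0.5 };
    let mut out = TorqueCommand::default();
    let input = RateInput { setpoint_rad_s: 10.0, measured_rad_s: 0.0 };
    assert!(task.process(&input, &mut out).is_ok());
    assert_eq!(out.normalized, 1.0);
}
```

Handing an agent the input type, the output type, and a failing test of this shape leaves it very little room to wander.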

LLMs excel at plagiarizing & translating so feed them with good examples!

With the structured nature of the tasks, natural patterns emerge. Having built a set of example tasks of every type helped a lot: you see the LLM catching on to the patterns and, for example, forgetting less and less often to deal with the Time Of Validity of the sensor data.
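The Time Of Validity pattern is a good example of something an LLM can copy once it has seen it. The sketch below uses hypothetical names (`Stamped`, `map`, `age_ns`, `fresh`) and a plain nanosecond timestamp, which is a simplification and not Copper's message type. The idea is that a transformed value keeps the time at which the original measurement was valid, instead of being restamped with the time at which it was processed.

```rust
/// Hypothetical message wrapper: a payload plus the time it was valid at.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Stamped<T> {
    pub tov_ns: u64,
    pub payload: T,
}

impl<T> Stamped<T> {
    /// Transforms the payload while preserving the original time of validity.
    pub fn map<U>(self, f: impl FnOnce(T) -> U) -> Stamped<U> {
        Stamped {
            tov_ns: self.tov_ns,
            payload: f(self.payload),
        }
    }

    /// Age of the measurement relative to `now_ns` (zero if `now_ns` is earlier).
    pub fn age_ns(&self, now_ns: u64) -> u64 {
        now_ns.saturating_sub(self.tov_ns)
    }
}

/// Returns the message only if it is still fresh enough to act on.
pub fn fresh<T>(msg: Stamped<T>, now_ns: u64, max_age_ns: u64) -> Option<Stamped<T>> {
    if msg.age_ns(now_ns) <= max_age_ns {
        Some(msg)
    } else {
        None
    }
}
```

A driver would stamp the raw reading once, and every downstream task would go through something like `map`, so a controller can reject stale data based on when the sensor actually measured it.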

LLMs don’t work without a reliable feedback loop

LLMs love to hallucinate, and if you don’t ask them to verify their work they will definitely take the easy route and say “done boss! happy boss?” without even compiling their code! It is critical to rub their noses in their mistakes.

With Copper it is easy to have the AI coding agent start the robot in a simulation, log the run deterministically, extract the logs (as JSON), and check the results with tools like jq to pinpoint any issues.

Same thing with actual logs from the real world: import them, ask your LLM to find the defect, and work with it toward a resolution. Extracting the steps for a deterministic reproduction is trivial.

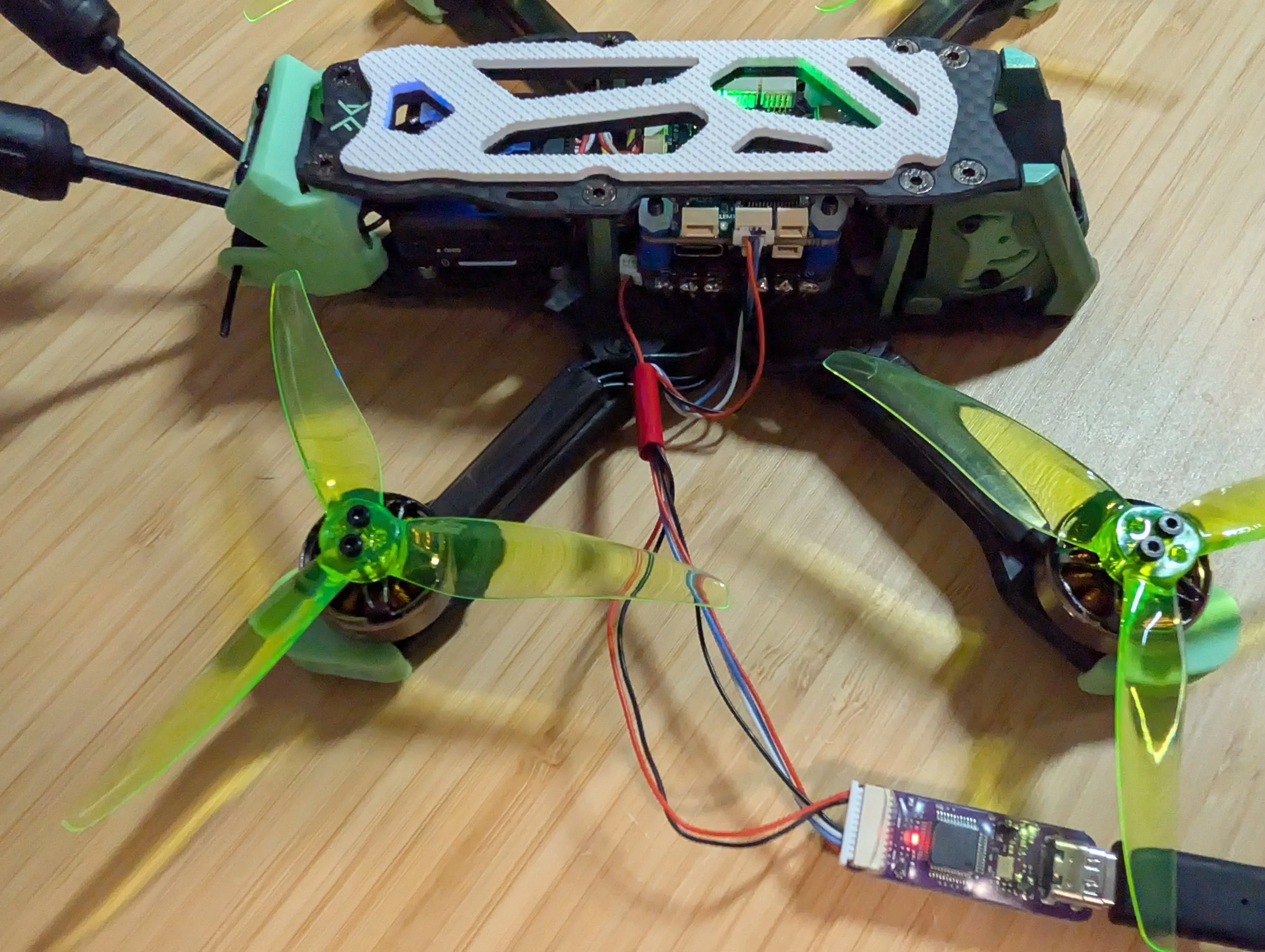

After a little bit of PID tuning, this BMI088 worked basically flawlessly. Here is its first flight.

cu-bmi088: a fully AI coded component with Copper, implemented in one shot with Codex

The BMI088 is a classic IMU that you can find in all types of robots. The context here is a flight controller for a quadcopter.

What was given as input:

a working example of another IMU (the mpu9250)

the expected output in the form of a message expecting SI units

a set of resources (this is a new type of component in Copper to expose hw and various system resources)
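To give an idea of what “a message expecting SI units” constrains, here is a small sketch of the raw-to-SI conversion such a driver has to get right. It is not the actual cu-bmi088 code: the names (`AccelRange`, `accel_raw_to_mps2`, `gyro_raw_to_rad_s`, `ImuSample`) are hypothetical, and the scale factors (a signed 16-bit reading mapped onto a full-scale range of ±3/6/12/24 g for the accelerometer and a configurable °/s range for the gyroscope) are my reading of the BMI088 datasheet and should be checked against it.

```rust
/// Standard gravity in m/s², used to convert g to SI units.
pub const STANDARD_GRAVITY: f32 = 9.80665;

/// Accelerometer full-scale ranges (assumed from the datasheet).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum AccelRange {
    G3,
    G6,
    G12,
    G24,
}

impl AccelRange {
    /// Full-scale value in g for this range setting.
    pub fn full_scale_g(self) -> f32 {
        match self {
            AccelRange::G3 => 3.0,
            AccelRange::G6 => 6.0,
            AccelRange::G12 => 12.0,
            AccelRange::G24 => 24.0,
        }
    }
}

/// Hypothetical output message: everything in SI units.
#[derive(Debug, Default, Clone, Copy, PartialEq)]
pub struct ImuSample {
    pub accel_mps2: [f32; 3],
    pub gyro_rad_s: [f32; 3],
}

/// Converts one raw accelerometer axis to m/s².
pub fn accel_raw_to_mps2(raw: i16, range: AccelRange) -> f32 {
    raw as f32 / 32768.0 * range.full_scale_g() * STANDARD_GRAVITY
}

/// Converts one raw gyroscope axis to rad/s, given the full-scale range in °/s.
pub fn gyro_raw_to_rad_s(raw: i16, full_scale_dps: f32) -> f32 {
    (raw as f32 / 32768.0 * full_scale_dps).to_radians()
}
```

Because the output type names its units, a reviewer or a unit test can check a half-scale reading against the expected m/s² or rad/s value without reading the register-level code.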

What this says about the future

FPV of Copper passing its first (very sketchy) powerloop

Large language models don’t change what makes systems robust. They don’t relax constraints, and they don’t replace discipline. If anything, they do the opposite: they amplify the consequences of whatever engineering choices were already present. In systems built on loose assumptions, implicit state, and weak boundaries, LLMs accelerate entropy. In systems built from first principles, they are forced to operate within real limits.

This is why the question is not whether LLMs will become better at writing code for robots, but whether our systems are structured well enough to tolerate probabilistic contributors at all. Determinism, decomposition, and enforceable invariants are not legacy ideas; they are the only way to keep feedback meaningful when behavior is no longer authored line-by-line by a human.

First-principles systems age well precisely because they constrain entropy. They make change observable, regressions attributable, and corrections local. This is critical in robotics, a discipline already riddled with externalities. That was true before LLMs existed, and it remains true now. Copper was not designed with LLMs in mind; it was designed to make complex systems observable, deterministic, and constrained. As a result, LLMs can be used without destabilizing the system, with their usefulness emerging as a side effect of those foundations rather than the reason they exist.

If you like what we are doing, please drop a star on our repo on GitHub. If you want to test out Copper on your project, join us on Discord; we can help!

We also have a bunch of tutorials on our YouTube channel to help you get started.